Days 16–20: What It Means to Orchestrate

FAM! We’ve reached the end of another week, and this one was all about scaling up.

If you’ve been following along, you know we already laid the foundation: infrastructure deployed across AWS and GCP, managed with Pulumi + Ansible, and Atlas keeping track of the fleet. The next question was: how do we actually orchestrate workloads across all of it?

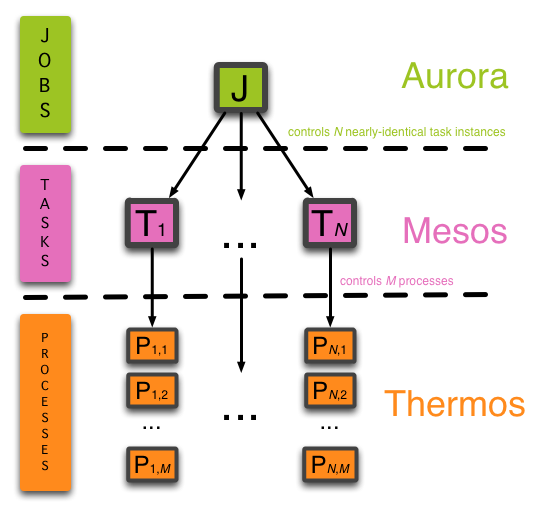

From Mesos + Aurora to Today

Back at Twitter, I led the SRE compute platform team that ran one of the world’s largest clusters. We used Apache Mesos as the “OS of the data center” and Apache Aurora as the scheduling engine. Together, they let us treat thousands of machines like one giant CPU—scheduling long-running services, cron jobs, batch processing, everything.

That system carried Twitter to hyperscale. But both Mesos and Aurora are now end-of-life. So, for this journey, I had to decide what comes next.

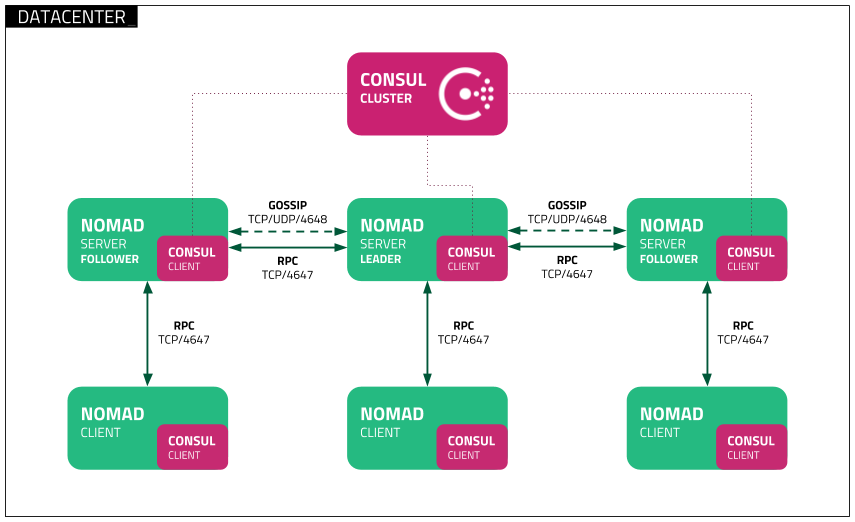

Choosing Nomad

The decision came down to Kubernetes vs Nomad. Kubernetes has the momentum, the ecosystem, the popularity. But I’ve been hands-on with hyperscale systems, and Kubernetes didn’t feel like the right fit for my vision. It was too complex, and honestly, too far removed from the simplicity I needed.

Nomad by HashiCorp clicked immediately. It carries the spirit of Mesos—simple, powerful, extensible—but with a modern design. Paired with Consul and other tools, it gives us a foundation to build a true orchestration layer for AI workloads.

So I gave the task to _Kodax (my AI junior engineer): stand up Nomad clusters across our infra. And just like that, our platform took its next step.

Global Clusters in 30 Days

Here’s what’s real right now:

A cluster running in AWS us-west-1

A cluster running in GCP africa-south1

Two Nomad clusters, in two cloud providers, on two continents. All within the first 30 days, with AI writing 90%+ of the code.

Lessons From the Journey

What makes me proud isn’t just the infra; it’s the process. _Kodax writes the code. _Jules refines the structure. I mentor both, just like I would any engineering team. And at every step, I document decisions in AGENT.md files to guide future work. That clarity is what lets AI scale with me.

We’ve gone from zero to a globally distributed orchestration platform in three weeks. And we’re only just beginning.

Next up… Hello World, the Muppy Way

—

👉 Catch up on earlier chapters at the bottom of this post to see how we got here.

Catch Up on Previous Chapters

Chapter 1 (Day 0): Let’s Talk About My Last 30 Days in Tech

Chapter 2 (Day 1): Whispering to AI – Mentoring _Kodax

Chapter 3 (Days 3–5): Specs, Standards, and the Birth of _Jules + _Kodax

Chapter 4 (Days 5–10): What a Week We’ve Had – Cloud as the New Edge

Chapter 5 (Days 11–15): Atlas Rising – Connecting Pulumi and Ansible

Chapter 6 (Days 16–20): Building a Global Cluster with AI